Stefaan Verhulst

Paper by Gianluca Sgueo: “What will European Union (EU) decision-making look like in the next decade and beyond? Is technological progress promoting more transparent, inclusive and participatory decision-making at EU level?

Technology has dramatically changed both the number and quality of connections between citizens and public administrations. With technological progress, citizens have gained improved access to public authorities through new digital communication channels. Innovative, tech-based approaches to policy-making have become the subject of a growing debate between academics and politicians. Theoretical approaches such as ‘CrowdLaw’, ‘Policy-Making 3.0’, ‘liquid’, ‘do-it-yourself’ or ‘technical’ democracy and ‘democratic innovations’ share the positive outlook towards technology; and technology is seen as the medium through which policies can be ‘co-created’ by decision-makers and stakeholders. Co-creation is mutually beneficial. Decision-makers gain legitimacy by incorporating the skills, knowledge and expertise of citizens, who in turn have the opportunity to shape new policies according to their needs and expectations.

EU institutions are at the forefront of experimentation with technologically innovative approaches to make decision-making more transparent and accessible to stakeholders. Efforts in modernising EU participatory channels through technology have evolved over time: from redressing criticism on democratic deficits, through fostering digital interactions with stakeholders, up to current attempts at designing policy-making in a friendly and participative manner.

While technological innovation holds the promise of making EU policy-making even more participatory, it is not without challenges. To begin with, technology is resource consuming. There are legal challenges associated with both over- and under-regulation of the use of technology in policy-making. Furthermore, technological innovation raises ethical concerns. It may increase inequality, for instance, or infringe personal privacy… (More)”.

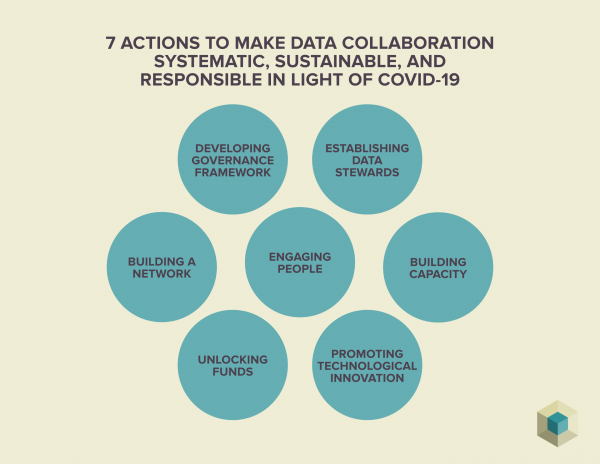

CALL FOR ACTION: “The spread of COVID-19 is a human tragedy and a worldwide crisis. The social and economic costs are huge, and they are contributing to a global slowdown. Despite the amount of data collected daily, we have not been able to leverage them to accelerate our understanding and action to counter COVID-19. As a result we have entered a global state of profound uncertainty and anxiety.

The current pandemic has not only shown vulnerabilities in our public health systems but has also made visible our failure to re-use data between the public and private sectors — what we call data collaboratives — to inform decision makers how to fight dynamic threats like the novel Coronavirus.

We have known for years that the re-use of aggregated and anonymized data — including from telecommunications, social media, and satellite feeds — can improve traditional models for tracking disease propagation. Telecommunications data has, for instance, been re-used to support the response to Ebola in Africa (Orange) and swine flu in Mexico (Telefónica). Social media data has been re-used to understand public perceptions around Zika in Brazil (Facebook). Satellite data has been used to track seasonal measles in Niger using nighttime lights. Geospatial data has similarly supported malaria surveillance and eradication efforts in Sub-Saharan Africa. In general, many infectious diseases have been monitored using mobile phones and mobility.

The potential and realized contributions of these and other data collaboratives reveal that the supply of and demand for data and data expertise are widely dispersed. They are spread across government, the private sector, and civil society and often poorly matched.

Much data needed by researchers is never made accessible to those who could productively put it to use while much data that is released is never used in a systematic and sustainable way during and post crisis.

This failure results in tremendous inefficiencies and costly delays in how we respond. It means lost opportunities to save lives and a persistent lack of preparation for future threats….(More)”.

About: “The Coronavirus Tech Handbook provides a space for technologists, specialists, civic organisations and public & private institutions to collaborate on a rapid and sophisticated response to the coronavirus outbreak. It is an active and evolving resource with thousands of expert contributors.

In less than two weeks it has grown to cover areas including:

- Detailed guidance for doctors and nurses,

- Advice and tools for educators adjusting to remote teaching,

- Community of open-source ventilator designers,

- Comprehensive data and models for forecasting the spread of the virus.

Coronavirus Tech Handbook’s goal is to create a rapidly evolving open source technical knowledge base that will help all institutions across civil society and the public sector collaborate to fight the outbreak.

Coronavirus Tech Handbook is not a place for the public to get advice, but a place for specialists to collaborate and make sure the best solutions are quickly shared and deployed….(More)”.

Article by Evan Nicole Brown: “… There’s currently no cure for COVID-19, but scientists are working on drugs that could help slow its spread. Fortunately, citizens can get involved in the process.

Foldit is an online video game that challenges players to fold various proteins into shapes where they are stable. Generally, folding proteins allows scientists (and citizens) to design new proteins from scratch, but in the case of coronavirus, Foldit players are trying to design the drugs to combat it. “Coronavirus has a ‘spike’ protein that it uses to recognize human cells,” says Brian Koepnick, a biochemist and researcher with the University of Washington’s Institute for Protein Design who has been using Foldit for protein research for six years. “Foldit players are designing new protein drugs that can bind to the COVID spike and block this recognition, [which could] potentially stop the virus from infecting more cells in an individual who has already been exposed to the virus.”

“In Foldit, you change the shape of a protein model to optimize your score. This score is actually a sophisticated calculation of the fold’s potential energy,” says Koepnick, adding that professional researchers use an identical score function in their work. “The coronavirus puzzles are set up such that high-scoring models have a better chance of actually binding to the target spike protein.” Ultimately, high-scoring solutions are analyzed by researchers and considered for real-world use….(More)”.

Farah Qaiser at Forbes: “A new study out in the PLOS Computational Biology journal shows that public attention in the midst of the Zika virus epidemic was largely driven by media coverage, rather than the epidemic’s magnitude or extent, highlighting the importance of mass media coverage when it comes to public health. This is reflected in the ongoing COVID-19 situation, where to date, the main 2019–20 coronavirus pandemic Wikipedia page has over ten million page views.

The 2015-2016 Zika virus epidemic began in Northeastern Brazil, and spread across South and North America. The Zika virus was largely spread by infected Aedes mosquitoes, where symptoms included a fever, headache, itching, and muscle pain. It could also be transmitted between pregnant women and their fetuses, causing microcephaly, where a baby’s head was much smaller than expected.

Similar to the ongoing COVID-19 situation, the media coverage around the Zika virus epidemic shaped public opinion and awareness.

“We knew that it was relevant, and very important, for public health to understand how the media and news shapes the attention of [the] public during epidemic outbreaks,” says Michele Tizzoni, a principal investigator based at the Institute for Scientific Interchange (ISI) Foundation. …

Today, the 2019–20 coronavirus pandemic Wikipedia page has around ten million page views. As per Toby Negrin, the Wikimedia Foundation’s Chief Product Officer, this page has been edited over 12K times by nearly 1,900 different editors. The page is currently semi-protected – a common practice for Wikipedia pages that are relevant to current news stories.

In an email, Negrin shared that “the day after the World Health Organization classified COVID-19 as a pandemic on March 11th, the main English Wikipedia article about the pandemic had nearly 1.1 million views, an increase of nearly 30% from the day before the WHO’s announcement (on March 10th, it had just over 809,000 views).” This is similar to the peaks in Wikipedia attention observed when official announcements took place during the Zika virus epidemic.

In addition, initial data from Tizzoni’s research group shows that the lockdown in Italy has resulted in a 50% or more decrease in movement between provinces. Similarly, Negrin notes that since the national lockdown in Italy, “total pageviews from Italy to all Wikimedia projects increased by nearly 30% over where they were at the same time last year.”

With increased public awareness during epidemics, tackling misinformation is critical. This remains important at Wikipedia.

“When it comes to documenting current events on Wikipedia, volunteers take even greater care to get the facts right,” stated Negrin, and pointed out that there is a page dedicated to misinformation during this pandemic, which has received over half a million views….(More)”.

Essay by Oren Perez: “This article focuses on “deliberative e-rulemaking”: digital consultation processes that seek to facilitate public deliberation over policy or regulatory proposals [1, 2]. The main challenge of e-rulemaking platforms is to support an “intelligent” deliberative process that enables decision makers to identify a wide range of options, weigh the relevant considerations, and develop epistemically responsible solutions.

This article discusses and critiques two approaches to this challenge: the Cornell Regulation Room project and the model of computationally assisted regulatory participation by Livermore et al. It then proceeds to explore two alternative approaches to e-rulemaking: One is based on the implementation of collaborative, wiki-styled tools. This article discusses the findings of an experiment, which was conducted at Bar-Ilan University and explored various aspects of a wiki-based collaborative e-rulemaking system. The second approach follows a more futuristic approach, focusing on the potential development of autonomous, artificial democratic agents. This article critically discusses this alternative, also in view of the recent debate regarding the idea of “augmented democracy.”…(More)”.

Paper by Nathalie A. Smuha: “This paper discusses the establishment of a governance framework to secure the development and deployment of “good AI”, and describes the quest for a morally objective compass to steer it. Asserting that human rights can provide such compass, this paper first examines what a human rights-based approach to AI governance entails, and sets out the promise it propagates. Subsequently, it examines the pitfalls associated with human rights, particularly focusing on the criticism that these rights may be too Western, too individualistic, too narrow in scope and too abstract to form the basis of sound AI governance. After rebutting these reproaches, a plea is made to move beyond the calls for a human rights-based approach, and start taking the necessary steps to attain its realisation. It is argued that, without elucidating the applicability and enforceability of human rights in the context of AI; adopting legal rules that concretise those rights where appropriate; enhancing existing enforcement mechanisms; and securing an underlying societal infrastructure that enables human rights in the first place, any human rights-based governance framework for AI risks falling short of its purpose….(More)”.

Paper by J.F. Landy and Leonid Tiokhin: “To what extent are research results influenced by subjective decisions that scientists make as they design studies?

Fifteen research teams independently designed studies to answer five original research questions related to moral judgments, negotiations, and implicit cognition. Participants from two separate large samples (total N > 15,000) were then randomly assigned to complete one version of each study. Effect sizes varied dramatically across different sets of materials designed to test the same hypothesis: materials from different teams rendered statistically significant effects in opposite directions for four out of five hypotheses, with the narrowest range in estimates being d = -0.37 to +0.26. Meta-analysis and a Bayesian perspective on the results revealed overall support for two hypotheses, and a lack of support for three hypotheses.

Overall, practically none of the variability in effect sizes was attributable to the skill of the research team in designing materials, while considerable variability was attributable to the hypothesis being tested. In a forecasting survey, predictions of other scientists were significantly correlated with study results, both across and within hypotheses. Crowdsourced testing of research hypotheses helps reveal the true consistency of empirical support for a scientific claim….(More)”.
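For readers unfamiliar with the quantities in this abstract, the following is a minimal sketch of how a standardized effect size (Cohen's d) is computed and how several such estimates can be combined with inverse-variance weights. It is a generic fixed-effect illustration, not the authors' analysis, which the excerpt does not specify. The function names and all numbers in the example are hypothetical.

```python
import math
from statistics import mean, variance


def cohens_d(treatment, control):
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    pooled_var = (
        (n1 - 1) * variance(treatment) + (n2 - 1) * variance(control)
    ) / (n1 + n2 - 2)
    return (mean(treatment) - mean(control)) / math.sqrt(pooled_var)


def d_variance(d, n1, n2):
    """Common large-sample approximation of the sampling variance of d."""
    return (n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2))


def pooled_effect(effects):
    """Fixed-effect estimate: inverse-variance weighted mean of (d, n1, n2) tuples."""
    weights = [1 / d_variance(d, n1, n2) for d, n1, n2 in effects]
    weighted_sum = sum(w * d for w, (d, _, _) in zip(weights, effects))
    return weighted_sum / sum(weights)


if __name__ == "__main__":
    # Hypothetical effect sizes from three sets of materials testing one hypothesis.
    illustrative = [(-0.37, 200, 200), (0.05, 200, 200), (0.26, 200, 200)]
    print(f"pooled d = {pooled_effect(illustrative):.3f}")
```

When individual estimates point in opposite directions, as the abstract reports, the pooled value can sit near zero even though single studies look decisive, which is one reason testing a hypothesis with many independently designed materials is informative.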

Kenan Malik at The Guardian: “The selfish gene. The Big Bang. The greenhouse effect. Metaphors are at the heart of scientific thinking. They provide the means for both scientists and non-scientists to understand, think through and talk about abstract ideas in terms of more familiar objects or phenomena.

But if metaphors can illuminate, they can also constrain. In his new book, The Idea of the Brain, zoologist and historian Matthew Cobb tells the story of how scientists and philosophers have tried to understand the brain and how it works. In every age, Cobb shows, people have thought about the brain largely in terms of metaphors, drawn usually from the most exciting technology of the day, whether clocks or telephone exchanges or the contemporary obsession with computers. The brain, Cobb observes, “is more like a computer than like a clock”, but “even the simplest animal brain is not a computer like anything we have built, nor one we can yet envisage”.

Metaphors allow “insight and discovery” but are “inevitably partial” and “there will come a point when the understanding they allow will be outweighed by the limits they impose”. We may, Cobb suggests, be at that point in picturing the brain as a computer.

The paradox of neuroscience today is that we possess an unprecedented amount of data about the brain but barely a glimmer of a theory to explain how it works. Indeed, as the French neuroscientist Yves Frégnac has put it, making ample use of metaphor, it can feel as if “we are drowning in a flood of information” and that “all sense of global understanding [of brain function] is in acute danger of being washed away”.

It’s not just in science that metaphors are significant in shaping the ways in which we think. In 1980, the linguist George Lakoff and philosopher Mark Johnson set off the modern debate on this issue with their seminal work, Metaphors We Live By. Metaphors, they argued, are not linguistic flourishes but the fundamental building blocks of thought. We don’t simply talk or write with metaphors, we also think with them….(More)”

Opinion by Todd Rogers: “…As a behavioral scientist, I study how people make decisions and process information, and I develop communications to change behavior for the better. And if there’s one lesson all the coronavirus email writers should take, it’s this: Messages should be as easy to understand as possible. This is difficult in normal times — and is no doubt much more so with facts on the ground changing as rapidly as they are….

As an illustration of how potent simplifying messaging can be, Carly Robinson at Harvard, Jessica Lasky-Fink of the University of California, Berkeley, Hedy Chang of Attendance Works and I conducted an experiment with a large school district, in which we rewrote a state-required notification about attendance.

All schools in California are required to send a truancy notification to families after a student is late or absent three times. The state legislature offered recommended language for the notice that was written at a college-reading level and contained 342 words in seven-point font. We rewrote the letter at a 5th grade reading level, in 14-point font and with half as many words. We then randomly assigned 131,312 families to either receive the state-recommended language or a version of our simplified letter.

The best version of our simplified letters was an estimated 40% more effective at reducing absences during the subsequent 30 days than the state-recommended language. Writing with an understanding of how humans work turns out to be more effective than writing with the sole goal of complying with the delivery of mandatory written information.

So, what can be done to make coronavirus messages, so critical to the functioning of our country right now, easier to understand — and more likely to be read?

- Write in the most accessible way possible. Use the Flesch-Kincaid readability test (built into Microsoft Word and Google Docs) to test the reading-level complexity of your writing.

- Use as few words as possible. Shorter messages are more likely to be read (see the long email in your inbox from three months ago that you still have not read).

- Write in a larger font. This makes long messages look ridiculous and makes it easier to read for recipients with eyesight issues. It also reduces the chance of the accidental — but way too common — occurrence of emails appearing in inboxes with absurdly small font.

- Eliminate gratuitous borders and images. These can often distract from the message you are trying to send.

- Use a clear structure. People skim, so help them. As opposed to a multi-paragraph email written in normal prose, consider categorizing information under headings like, “What we want you to know” (or just “KNOW”) and “what we would like you to do” (or, concisely, “DO”). Consider putting content within each category in bullet points….(More)”
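The first tip above names the Flesch-Kincaid readability test. As a rough sketch of what that test measures, the snippet below computes the Flesch-Kincaid grade level, 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The syllable counter is a crude vowel-group heuristic assumed here for illustration, so results will differ somewhat from those of word processors, which use their own counting rules.

```python
import re


def count_syllables(word: str) -> int:
    """Estimate syllables by counting vowel groups (a heuristic, not a dictionary lookup)."""
    lowered = word.lower()
    count = len(re.findall(r"[aeiouy]+", lowered))
    # Treat a trailing "e" as silent unless the word ends in "le" (as in "simple").
    if lowered.endswith("e") and not lowered.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Return the approximate U.S. school grade level needed to read the text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+(?:'[A-Za-z]+)?", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


if __name__ == "__main__":
    draft = "Please keep your child home if they feel sick. Call the school office by nine."
    print(f"Estimated grade level: {flesch_kincaid_grade(draft):.1f}")
```

Shorter sentences and shorter words both lower the score, which matches the advice in the list: fewer words and plainer language.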