Paper by Peter Stone et al: “In September 2016, Stanford’s “One Hundred Year Study on Artificial Intelligence” project (AI100) issued the first report of its planned long-term periodic assessment of artificial intelligence (AI) and its impact on society. It was written by a panel of 17 study authors, each of whom is deeply rooted in AI research, chaired by Peter Stone of the University of Texas at Austin. The report, entitled “Artificial Intelligence and Life in 2030,” examines eight domains of typical urban settings on which AI is likely to have impact over the coming years: transportation, home and service robots, healthcare, education, public safety and security, low-resource communities, employment and workplace, and entertainment. It aims to provide the general public with a scientifically and technologically accurate portrayal of the current state of AI and its potential and to help guide decisions in industry and governments, as well as to inform research and development in the field. The charge for this report was given to the panel by the AI100 Standing Committee, chaired by Barbara Grosz of Harvard University….(More)”.

The political imaginary of National AI Strategies

Paper by Guy Paltieli: “In the past few years, several democratic governments have published their National AI Strategies (NASs). These documents outline how AI technology should be implemented in the public sector and explain the policies that will ensure the ethical use of personal data. In this article, I examine these documents as political texts and reconstruct the political imaginary that underlies them. I argue that these documents intervene in contemporary democratic politics by suggesting that AI can help democracies overcome some of the challenges they are facing. To achieve this, NASs use different kinds of imaginaries—democratic, sociotechnical and data—that help citizens envision what a future AI democracy might look like. As part of this collective effort, a new kind of relationship between citizens and governments is formed. Citizens are seen as autonomous data subjects, but at the same time, they are expected to share their personal data for the common good. As a result, I argue, a new kind of political imaginary is developed in these documents. One that maintains a human-centric approach while championing a vision of collective sovereignty over data. This kind of political imaginary can become useful in understanding the roles of citizens and governments in this technological age….(More)”.

Algorithms Quietly Run the City of DC—and Maybe Your Hometown

Article by Khari Johnson: “Washington, DC, is the home base of the most powerful government on earth. It’s also home to 690,000 people—and 29 obscure algorithms that shape their lives. City agencies use automation to screen housing applicants, predict criminal recidivism, identify food assistance fraud, determine if a high schooler is likely to drop out, inform sentencing decisions for young people, and many other things.

That snapshot of semiautomated urban life comes from a new report from the Electronic Privacy Information Center (EPIC). The nonprofit spent 14 months investigating the city’s use of algorithms and found they were used across 20 agencies, with more than a third deployed in policing or criminal justice. For many systems, city agencies would not provide full details of how their technology worked or was used. The project team concluded that the city is likely using still more algorithms that they were not able to uncover.

The findings are notable beyond DC because they add to the evidence that many cities have quietly put bureaucratic algorithms to work across their departments, where they can contribute to decisions that affect citizens’ lives.

Government agencies often turn to automation in hopes of adding efficiency or objectivity to bureaucratic processes, but it’s often difficult for citizens to know they are at work, and some systems have been found to discriminate and lead to decisions that ruin human lives. In Michigan, an unemployment-fraud detection algorithm with a 93 percent error rate caused 40,000 false fraud allegations. A 2020 analysis by Stanford University and New York University found that nearly half of federal agencies are using some form of automated decisionmaking systems…(More)”.

Artificial intelligence in government: Concepts, standards, and a unified framework

Paper by Vincent J. Straub, Deborah Morgan, Jonathan Bright, Helen Margetts: “Recent advances in artificial intelligence (AI) and machine learning (ML) hold the promise of improving government. Given the advanced capabilities of AI applications, it is critical that these are embedded using standard operational procedures, clear epistemic criteria, and behave in alignment with the normative expectations of society. Scholars in multiple domains have subsequently begun to conceptualize the different forms that AI systems may take, highlighting both their potential benefits and pitfalls. However, the literature remains fragmented, with researchers in social science disciplines like public administration and political science, and the fast-moving fields of AI, ML, and robotics, all developing concepts in relative isolation. Although there are calls to formalize the emerging study of AI in government, a balanced account that captures the full breadth of theoretical perspectives needed to understand the consequences of embedding AI into a public sector context is lacking. Here, we unify efforts across social and technical disciplines by using concept mapping to identify 107 different terms used in the multidisciplinary study of AI. We inductively sort these into three distinct semantic groups, which we label the (a) operational, (b) epistemic, and (c) normative domains. We then build on the results of this mapping exercise by proposing three new multifaceted concepts to study AI-based systems for government (AI-GOV) in an integrated, forward-looking way, which we call (1) operational fitness, (2) epistemic completeness, and (3) normative salience. Finally, we put these concepts to work by using them as dimensions in a conceptual typology of AI-GOV and connecting each with emerging AI technical measurement standards to encourage operationalization, foster cross-disciplinary dialogue, and stimulate debate among those aiming to reshape public administration with AI…(More)”.

Sex and Gender Bias in Technology and Artificial Intelligence

Book edited by Davide Cirillo, Silvina Catuara Solarz, and Emre Guney: “…details the integration of sex and gender as critical factors in innovative technologies (artificial intelligence, digital medicine, natural language processing, robotics) for biomedicine and healthcare applications. By systematically reviewing existing scientific literature, a multidisciplinary group of international experts analyze diverse aspects of the complex relationship between sex and gender, health and technology, providing a perspective overview of the pressing need of an ethically-informed science. The reader is guided through the latest implementations and insights in technological areas of accelerated growth, putting forward the neglected and overlooked aspects of sex and gender in biomedical research and healthcare solutions that leverage artificial intelligence, biosensors, and personalized medicine approaches to predict and prevent disease outcomes. The reader comes away with a critical understanding of this fundamental issue for the sake of better future technologies and more effective clinical approaches….(More)”.

Could an algorithm predict the next pandemic?

Article by Simon Makin: “Leap is a machine-learning algorithm that uses sequence data to classify influenza viruses as either avian or human. The model had been trained on a huge number of influenza genomes — including examples of H5N8 — to learn the differences between those that infect people and those that infect birds. But the model had never seen an H5N8 virus categorized as human, and Carlson was curious to see what it made of this new subtype.

Somewhat surprisingly, the model identified it as human with 99.7% confidence. Rather than simply reiterating patterns in its training data, such as the fact that H5N8 viruses do not typically infect people, the model seemed to have inferred some biological signature of compatibility with humans. “It’s stunning that the model worked,” says Carlson. “But it’s one data point; it would be more stunning if I could do it a thousand more times.”

The zoonotic process of viruses jumping from wildlife to people causes most pandemics. As climate change and human encroachment on animal habitats increase the frequency of these events, understanding zoonoses is crucial to efforts to prevent pandemics, or at least to be better prepared.

Researchers estimate that around 1% of the mammalian viruses on the planet have been identified, so some scientists have attempted to expand our knowledge of this global virome by sampling wildlife. This is a huge task, but over the past decade or so, a new discipline has emerged — one in which researchers use statistical models and machine learning to predict aspects of disease emergence, such as global hotspots, likely animal hosts or the ability of a particular virus to infect humans. Advocates of such ‘zoonotic risk prediction’ technology argue that it will allow us to better target surveillance to the right areas and situations, and guide the development of vaccines and therapeutics that are most likely to be needed.

However, some researchers are sceptical of the ability of predictive technology to cope with the scale and ever-changing nature of the virome. Efforts to improve the models and the data they rely on are under way, but these tools will need to be a part of a broader effort if they are to mitigate future pandemics…(More)”.

The Proof is in the Pudding: Revealing the SDGs with Artificial Intelligence

AFD Research Paper: “The use of frontier technologies in the field of sustainability is likely to accompany its visibility, and the quality of information available to decision makers. This paper explores the possibility of using artificial intelligence to analyze Public Development Banks’ annual reports…(More)”.

Responsible AI licenses: a practical tool for implementing the OECD Principles for Trustworthy AI

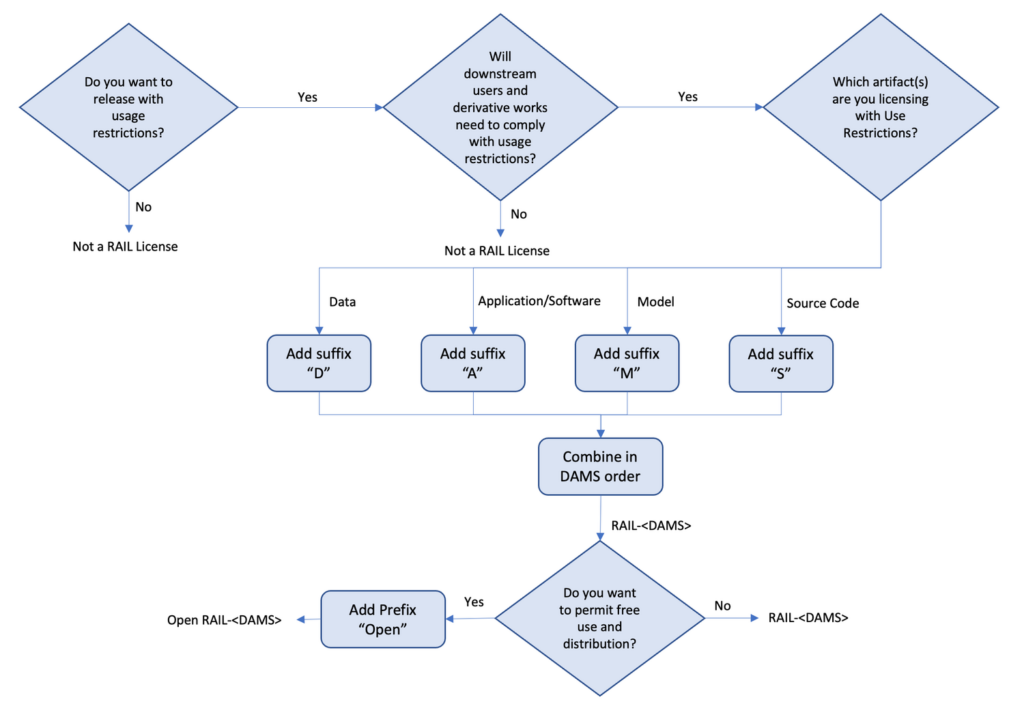

Article by Carlos Muñoz Ferrandis: “Recent socio-ethical concerns on the development, use, and commercialization of AI-related products and services have led to the emergence of new types of licenses devoted to promoting the responsible use of AI systems: Responsible AI Licenses, or RAILs.

RAILs are AI-specific licenses that include restrictions on how the licensee can use the AI feature due to the licensor’s concerns about the technical capabilities and limitations of the AI feature. This approach concerns the two existing types of these licenses. The RAIL license can be used for ML models, source code, applications and services, and data. When these licenses allow free access and flexible downstream distribution of the licensed AI feature, they are OpenRAILs.

The RAIL Initiative was created in 2019 to encourage the industry to adopt use restrictions in licenses as a way to mitigate the risks of misuse and potential harm caused by AI systems…(More)”.

How Confucianism could put fear about Artificial Intelligence to bed

Article by Tom Cassauwers: “Western culture has had a long history of individualism, warlike use of technology, Christian apocalyptic thinking and a strong binary between body and soul. These elements might explain the West’s obsession with the technological apocalypse and its opposite: techno-utopianism. In Asia, it’s now common to explain China’s dramatic rise as a leader in AI and robotics as a consequence of state support from the world’s largest economy. But what if — in addition to the massive state investment — China and other Asian nations have another advantage, in the form of Eastern philosophies?

There’s a growing view among independent researchers and philosophers that Confucianism and Buddhism could offer healthy alternative perspectives on the future of technology. And with AI and robots rapidly increasing in importance across industries, it’s time for the West to turn to the East for answers…

So what would a non-Western way of thinking about tech look like? First, there might be a different interpretation of personhood. Both Confucianism and Buddhism potentially open up the way for nonhumans to reach the status of humans. In Confucianism, the state of reaching personhood “is not a given. You need to work to achieve it,” says Wong. The person’s attitude toward certain ethical virtues determines whether or not they reach the status of a human. That also means that “we can attribute personhood to nonhuman things like robots when they play ethically relevant roles and duties as humans,” Wong adds.

Buddhism offers a similar argument, where robots can hypothetically achieve a state of enlightenment, which is present everywhere, not only in humans — an argument made as early as the 1970s by Japanese roboticist Masahiro Mori. It may not be a coincidence that robots enjoy some of their highest social acceptance in Japan, with its Buddhist heritage. “Westerners are generally reluctant about the nature of robotics and AI, considering only humans as true beings, while Easterners more often consider devices as similar to humans,” says Jordi Vallverdú, a professor of philosophy at the Autonomous University of Barcelona….(More)”.

The Exploited Labor Behind Artificial Intelligence

Essay by Adrienne Williams, Milagros Miceli, and Timnit Gebru: “The public’s understanding of artificial intelligence (AI) is largely shaped by pop culture — by blockbuster movies like “The Terminator” and their doomsday scenarios of machines going rogue and destroying humanity. This kind of AI narrative is also what grabs the attention of news outlets: a Google engineer claiming that its chatbot was sentient was among the most discussed AI-related news in recent months, even reaching Stephen Colbert’s millions of viewers. But the idea of superintelligent machines with their own agency and decision-making power is not only far from reality — it distracts us from the real risks to human lives surrounding the development and deployment of AI systems. While the public is distracted by the specter of nonexistent sentient machines, an army of precarized workers stands behind the supposed accomplishments of artificial intelligence systems today.

Many of these systems are developed by multinational corporations located in Silicon Valley, which have been consolidating power at a scale that, journalist Gideon Lewis-Kraus notes, is likely unprecedented in human history. They are striving to create autonomous systems that can one day perform all of the tasks that people can do and more, without the required salaries, benefits or other costs associated with employing humans. While this corporate executives’ utopia is far from reality, the march to attempt its realization has created a global underclass, performing what anthropologist Mary L. Gray and computational social scientist Siddharth Suri call ghost work: the downplayed human labor driving “AI”.

Tech companies that have branded themselves “AI first” depend on heavily surveilled gig workers like data labelers, delivery drivers and content moderators. Startups are even hiring people to impersonate AI systems like chatbots, due to the pressure by venture capitalists to incorporate so-called AI into their products. In fact, London-based venture capital firm MMC Ventures surveyed 2,830 AI startups in the EU and found that 40% of them didn’t use AI in a meaningful way…(More)”.