Adam Mazmanian in FCW: “A year after the launch of the government’s digital strategy, there’s no official tally of the economic activity generated by the release of government datasets for use in commercial applications.

“We have anecdotal examples, but nothing official yet,” said federal CIO Steven VanRoekel in an invitation-only meeting with reporters at the FOSE conference on May 15. “It’s an area where we have an opportunity to start to talk about this, because it’s starting to tick up a bit, and the numbers are looking pretty good.”…

The Obama administration is banking on an explosion in the use of federal datasets for commercial and government applications alike. Last week’s executive order and accompanying directive from the Office of Management and Budget task agencies with making open and machine-readable data the new default setting for government information.

VanRoekel said that the merits of the open data standard don’t necessarily need to be justified by economic activity….

The executive order also spells out privacy concerns arising from the so-called ‘mosaic effect,’ by which information from disparate datasets can be overlaid to decipher personally identifiable information.”
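
To make the mosaic effect concrete, here is a minimal sketch of how two datasets that look harmless on their own could be overlaid. Everything in it is an assumption made for illustration: the records are invented, and the choice of ZIP code, birth year and sex as the shared fields is hypothetical rather than anything the executive order specifies.

```python
# Invented records for illustration only. The first dataset has no names;
# the second has names but no sensitive attribute.
health_visits = [
    {"zip": "20001", "birth_year": 1975, "sex": "F", "diagnosis": "asthma"},
    {"zip": "20002", "birth_year": 1982, "sex": "M", "diagnosis": "diabetes"},
]
voter_roll = [
    {"name": "Jane Roe", "zip": "20001", "birth_year": 1975, "sex": "F"},
    {"name": "John Doe", "zip": "20002", "birth_year": 1982, "sex": "M"},
    {"name": "Ann Poe", "zip": "20003", "birth_year": 1990, "sex": "F"},
]

# Hypothetical choice of fields that both datasets happen to share.
QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")


def overlay(anonymous_rows, named_rows, keys=QUASI_IDENTIFIERS):
    """Join two datasets on shared fields and keep only unambiguous matches."""
    index = {}
    for row in named_rows:
        index.setdefault(tuple(row[k] for k in keys), []).append(row)

    matches = []
    for row in anonymous_rows:
        candidates = index.get(tuple(row[k] for k in keys), [])
        if len(candidates) == 1:  # exactly one named person fits this record
            matches.append({**candidates[0], **row})
    return matches


reidentified = overlay(health_visits, voter_roll)
for person in reidentified:
    print(person["name"], "->", person["diagnosis"])
```

Neither table links a name to a diagnosis, yet the join does. Coarsening or withholding the shared fields before release is one way an agency might reduce that risk.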

OpenData Latinoamérica

Mariano Blejman and Miguel Paz @ IJNet Blog: “We need a central repository where you can share the data that you have proved to be reliable. Our answer to this need: OpenData Latinoamérica, which we are leading as ICFJ Knight International Journalism Fellows.

Inspired by the open data portal created by ICFJ Knight International Journalism Fellow Justin Arenstein in Africa, OpenData Latinoamérica aims to improve the use of data in this region where data sets too often fail to show up where they should, and when they do, are scattered about the web at governmental repositories and multiple independent repositories where the data is removed too quickly.

…

The portal will be used at two big upcoming events: Bolivia’s first DataBootCamp and the Conferencia de Datos Abiertos (Open Data Conference) in Montevideo, Uruguay. Then, we’ll hold a series of hackathons and scrape-athons in Chile, which is in a period of presidential elections in which citizens increasingly demand greater transparency. Releasing data and developing applications for accountability will be the key.”

Challenge: Visualizing Online Takedown Requests

“The free flow of information defines the Internet. Innovations like Wikipedia and crowdsourcing owe their existence to and are powered by the resulting streams of knowledge and ideas. Indeed, more information means more choice, more freedom, and ultimately more power for the individual and society. But — citing reasons like defamation, national security, and copyright infringement — governments, corporations, and other organizations at times may regulate and restrict information online. By blocking or filtering sites, issuing court orders limiting access to information, enacting legislation or pressuring technology and communication companies, governments and other organizations aim to censor one of the most important means of free expression in the world. What does this mean and to what extent should attempts to censor online content be permitted?…We challenge you to visualize the removal requests in Google’s Transparency Report. What in this data should be communicated to the general public? Are there any trends or patterns in types of requests that have been complied with? Have legal and policy environments shaped what information is available and/or restricted in different countries? The data set on government requests (~1 thousand rows) provides summaries broken down by country, Google product, and reason. The data set on copyright requests, however, is much larger (~1 million rows) and includes each individual request. Use one or both data sets, by themselves or with other open data sets. We’re excited to partner with Google for this challenge, and we’re offering $5,000 in prizes.”

Deadline: Thursday, June 27, 2013, 11:59 pm EDT

Winner Announced: Thursday, July 11, 2013

More at http://visualizing.org/contests/visualizing-online-takedown-requests
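
As a starting point for the challenge's question about patterns in requests that were complied with, the sketch below groups a summary table by country or by reason. The column names (`country`, `product`, `reason`, `requests`, `complied`) and the sample rows are assumptions made up for illustration; the actual Transparency Report files may be laid out differently.

```python
import csv
import io
from collections import Counter

# Invented sample rows; the real data set's columns may differ.
SAMPLE = """country,product,reason,requests,complied
Brazil,YouTube,Defamation,10,6
Brazil,Web Search,Copyright,4,4
Germany,YouTube,Defamation,5,1
"""


def summarize(csv_text, group_by):
    """Return {group: (total requests, share complied with)} for one column."""
    totals, complied = Counter(), Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = row[group_by]
        totals[key] += int(row["requests"])
        complied[key] += int(row["complied"])
    return {key: (totals[key], complied[key] / totals[key]) for key in totals}


by_country = summarize(SAMPLE, "country")
by_reason = summarize(SAMPLE, "reason")

for country, (total, rate) in by_country.items():
    print(f"{country}: {total} requests, {rate:.0%} complied with")
```

A table like `by_country` or `by_reason` could then feed whatever charting tool an entrant prefers.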

New Open Data Executive Order and Policy

The White House: “The Obama Administration today took groundbreaking new steps to make information generated and stored by the Federal Government more open and accessible to innovators and the public, to fuel entrepreneurship and economic growth while increasing government transparency and efficiency.

Today’s actions—including an Executive Order signed by the President and an Open Data Policy released by the Office of Management and Budget and the Office of Science and Technology Policy—declare that information is a valuable national asset whose value is multiplied when it is made easily accessible to the public. The Executive Order requires that, going forward, data generated by the government be made available in open, machine-readable formats, while appropriately safeguarding privacy, confidentiality, and security.

The move will make troves of previously inaccessible or unmanageable data easily available to entrepreneurs, researchers, and others who can use those files to generate new products and services, build businesses, and create jobs….

Along with the Executive Order and Open Data Policy, the Administration announced a series of complementary actions:

• A new Data.Gov. In the months ahead, Data.gov, the powerful central hub for open government data, will launch new services that include improved visualization, mapping tools, better context to help locate and understand these data, and robust Application Programming Interface (API) access for developers.

• New open source tools to make data more open and accessible. The US Chief Information Officer and the US Chief Technology Officer are releasing free, open source tools on Github, a site that allows communities of developers to collaboratively develop solutions. This effort, known as Project Open Data, can accelerate the adoption of open data practices by providing plug-and-play tools and best practices to help agencies improve the management and release of open data. For example, one tool released today automatically converts simple spreadsheets and databases into APIs for easier consumption by developers. Anyone, from government agencies to private citizens to local governments and for-profit companies, can freely use and adapt these tools starting immediately.

• Building a 21st century digital government. As part of the Administration’s Digital Government Strategy and Open Data Initiatives in health, energy, education, public safety, finance, and global development, agencies have been working to unlock data from the vaults of government, while continuing to protect privacy and national security. Newly available or improved data sets from these initiatives will be released today and over the coming weeks as part of the one year anniversary of the Digital Government Strategy.

• Continued engagement with entrepreneurs and innovators to leverage government data. The Administration has convened and will continue to bring together companies, organizations, and civil society for a variety of summits to highlight how these innovators use open data to positively impact the public and address important national challenges. In June, Federal agencies will participate in the fourth annual Health Datapalooza, hosted by the nonprofit Health Data Consortium, which will bring together more than 1,800 entrepreneurs, innovators, clinicians, patient advocates, and policymakers for information sessions, presentations, and “code-a-thons” focused on how the power of data can be harnessed to help save lives and improve healthcare for all Americans.

For more information on open data highlights across government visit: http://www.whitehouse.gov/administration/eop/ostp/library/docsreports”
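
The announcement mentions a tool that converts simple spreadsheets into APIs. The sketch below is not that tool; it is a rough illustration of the general idea, using only the Python standard library. The sample sheet, the function names `load_records` and `handle_request`, and the response shape are all hypothetical.

```python
import csv
import io
import json

# Invented spreadsheet contents for illustration.
SAMPLE_SHEET = """id,agency,dataset
1,NOAA,Weather stations
2,DOT,Bridge inspections
3,NOAA,Tide gauges
"""


def load_records(csv_text):
    """Read spreadsheet rows into a list of dictionaries keyed by header."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def handle_request(records, **filters):
    """Return a JSON response holding the rows that match every filter."""
    hits = [
        row for row in records
        if all(row.get(field) == value for field, value in filters.items())
    ]
    return json.dumps({"count": len(hits), "results": hits})


records = load_records(SAMPLE_SHEET)
response = handle_request(records, agency="NOAA")
print(response)
```

In a real service, `handle_request` would sit behind an HTTP endpoint and the filters would come from query parameters; that layer is left out here to keep the sketch short.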

The Uncertain Relationship Between Open Data and Accountability

Tiago Peixoto’s Response to Yu and Robinson’s paper on The New Ambiguity of “Open Government”: “By looking at the nature of data that may be disclosed by governments, Harlan Yu and David Robinson provide an analytical framework that evinces the ambiguities underlying the term “open government data.” While agreeing with their core analysis, I contend that the authors ignore the enabling conditions under which transparency may lead to accountability, notably the publicity and political agency conditions. I argue that the authors also overlook the role of participatory mechanisms as an essential element in unlocking the potential for open data to produce better government decisions and policies. Finally, I conduct an empirical analysis of the publicity and political agency conditions in countries that have launched open data efforts, highlighting the challenges associated with open data as a path to accountability.”

6 Things You May Not Know About Open Data

GovTech: “On Friday, May 3, Palo Alto, Calif., CIO Jonathan Reichental …said that when it comes to making data more open, “The invisible becomes visible,” and he outlined six major points that identify and define what open data really is:

1. It’s the liberation of peoples’ data

The public sector collects data that pertains to government, such as employee salaries, trees or street information, and government entities are therefore responsible for liberating that data so the constituent can view it in an accessible format. Though this practice has become more commonplace in recent years, Reichental said government should have been doing this all along.

2. Data has to be consumable by a machine

Piecing data together from a spreadsheet to a website or containing it in a PDF isn’t the easiest way to retrieve data. To make data more open, it needs to be in a readable format so users don’t have to go through additional trouble of finding or reading it.

3. Data has a derivative value

When data is made available to the public, people like app developers, architects or others are able to analyze the data. In some cases, data can be used in city planning to understand what’s happening at the city scale.

4. It eliminates the middleman

For many states, public records laws require them to provide data when a public records request is made. But oftentimes, complying with such request regulations involves long and cumbersome processes. Lawyers and other government officials must process paperwork, and it can take weeks to complete a request. By having data readily available, these processes can be eliminated, thus also eliminating the middleman responsible for processing the requests. Direct access to the data saves time and resources.

5. Data creates deeper accountability

Since government is expected to provide accessible data, it is therefore being watched, making it more accountable for its actions — everything from emails, salaries and city council minutes can be viewed by the public.

6. Open Data builds trust

When the community can see what’s going on in its government through the access of data, Reichental said individuals begin to build more trust in their government and feel less like the government is hiding information.”

Linking open data to augmented intelligence and the economy

Open Data Institute and Professor Nigel Shadbolt (@Nigel_Shadbolt) interviewed by Alex Howard (@digiphile): “…there are some clear learnings. One that I’ve been banging on about recently has been that yes, it really does matter to turn the dial so that governments have a presumption to publish non-personal public data. If you would publish it anyway, under a Freedom of Information request or whatever your local legislative equivalent is, why aren’t you publishing it anyway as open data? That, as a behavioral change, is a big one for many administrations where either the existing workflow or culture is, “Okay, we collect it. We sit on it. We do some analysis on it, and we might give it away piecemeal if people ask for it.” We should construct publication process from the outset to presume to publish openly. That’s still something that we are two or three years away from, working hard with the public sector to work out how to do and how to do properly.

We’ve also learned that in many jurisdictions, the amount of [open data] expertise within administrations and within departments is slight. There just isn’t really the skillset, in many cases, for people to know what it is to publish using technology platforms. So there’s a capability-building piece, too.

One of the most important things is it’s not enough to just put lots and lots of datasets out there. It would be great if the “presumption to publish” meant they were all out there anyway — but when you haven’t got any datasets out there and you’re thinking about where to start, the tough question is to say, “How can I publish data that matters to people?”

The data that matters is revealed in the fact that if we look at the download stats on these various UK, US and other [open data] sites. There’s a very, very distinctive parallel curve. Some datasets are very, very heavily utilized. You suspect they have high utility to many, many people. Many of the others, if they can be found at all, aren’t being used particularly much. That’s not to say that, under that long tail, there isn’t large amounts of use. A particularly arcane open dataset may have exquisite use to a small number of people.

The real truth is that it’s easy to republish your national statistics. It’s much harder to do a serious job on publishing your spending data in detail, publishing police and crime data, publishing educational data, publishing actual overall health performance indicators. These are tough datasets to release. As people are fond of saying, it holds politicians’ feet to the fire. It’s easy to build a site that’s full of stuff — but does the stuff actually matter? And does it have any economic utility?”

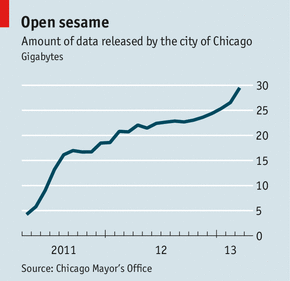

Cities and Data

The Economist: “Many cities around the country find themselves in a similar position: they are accumulating data faster than they know what to do with. One approach is to give them to the public. For example, San Francisco, New York, Philadelphia, Boston and Chicago are or soon will be sharing the grades that health inspectors give to restaurants with an online restaurant directory.

Another way of doing it is simply to publish the raw data and hope that others will figure out how to use them. This has been particularly successful in Chicago, where computer nerds have used open data to create many entirely new services. Applications are now available that show which streets have been cleared after a snowfall, what time a bus or train will arrive and how requests to fix potholes are progressing.

New York and Chicago are bringing together data from departments across their respective cities in order to improve decision-making. When a city holds a parade it can combine data on street closures, bus routes, weather patterns, rubbish trucks and emergency calls in real time.”

Open Data and Civil Society

Nick Hurd, UK Minister for Civil Society, on the potential of open data for the third sector in The Guardian:

“Part of the value of civil society is holding power to account, and if this can be underpinned by good quality data, we will have a very powerful tool indeed….The UK is absolutely at the vanguard of the global open data movement, and NGOs have a great sense that this is something they want to play a part in. There is potential to help them do more of what they do, and to do it better, but they’re going to need a lot of help in terms of information and access to events where they can exchange ideas and best practice.”

Also in the article: “The competitive marketplace and bilateral nature of funding awards make this issue perhaps even more significant in the charity sector, and it is in changing attitudes and encouraging this warts-and-all approach that movement leadership bodies such as the Open Data Institute (ODI) will play their biggest role….Joining the ODI in driving and overseeing wider adoption of these practices is the Open Knowledge Foundation (OKFN). One of its first projects was a partnership with an organisation called Publish What You Fund, the aim of which was to release data on the breakdown of funding to sectors and departments in Uganda according to source – government or aid.

…Open data can often take the form of complex databases that need to be interrogated by a data specialist, and many charities simply do not have these technical resources sitting untapped. OKFN is foremost among a number of organisations looking to bridge this gap by training members of the public in data mining and analysis techniques….

“We’re all familiar with the phrase ‘knowledge is power’, and in this case knowledge means insight gained from this newly available data. But data doesn’t turn into insight or knowledge magically. It takes people, it takes skills, it takes tools to become knowledge, data and change.

“We set up the School of Data in partnership with Peer 2 Peer University just over a year and a half ago with the aim of enabling citizens to carry out this process, and what we really want to do is empower charities to use data in the same way”, said Pollock.”

The Value of Open Data – Don’t Measure Growth, Measure Destruction

“What do I mean by this?

Take SeeClickFix. Here is a company that, leveraging the Open311 standard, is able to provide many cities with a 311 solution that works pretty much out of the box. 20 years ago, this was a $10 million+ problem for a major city to solve, and wasn’t even something a small city could consider adopting – it was just prohibitively expensive. Today, SeeClickFix takes what was a 7 or 8 digit problem, and makes it a 5 or 6 digit problem. Indeed, I suspect SeeClickFix almost works better in a small to mid-sized government that doesn’t have complex work order software and so can just use SeeClickFix as a general solution. For this part of the market, it has crushed the cost out of implementing a solution.

Another example. And one I’m most excited about. Look at CKAN and Socrata. Most people believe these are open data portal solutions. That is a mistake. These are data management companies that happen to have simply made “sharing” (or “open”) a core design feature. You know who does data management? SAP. What Socrata and CKAN offer is a way to store, access, share and engage with data previously gathered and held by companies like SAP at a fraction of the cost. A SAP implementation is a 7 or 8 (or god forbid, 9) digit problem. And many city IT managers complain that doing anything with data stored in SAP takes time and it takes money. CKAN and Socrata may have only a fraction of the features, but they are dead simple to use, and make it dead simple to extract and share data. More importantly they make these costly 7 and 8 digit problems potentially become cheap 5 or 6 digit problems.

On the analysis side, again, I do hope there will be big wins – but what I really think open data is going to do is lower the costs of creating lots of small wins – crazy numbers of tiny efficiencies….

Don’t look for the big bang, and don’t measure the growth in spending or new jobs. Rather let’s try to measure the destruction and cumulative impact of a thousand tiny wins. Cause that is where I think we’ll see it most.”